Businesses and research institutions use web scraping software to collect online data that they can apply to their operations. These tools retrieve website data automatically, which reduces the manual effort required and shortens processing time. Choosing the right web scrapers for 2025 supports evidence-driven decisions in competitive analysis and in identifying new opportunities. Current web scraping solutions increasingly combine AI-based data parsing with cloud-based management and compliance with data protection rules.

Scrapy and BeautifulSoup, together with other tools such as Octoparse, ParseHub, Bright Data, Apify, and WebHarvy, remain among the leading choices for 2025. These tools have grown from basic data acquisition into automated data collection. Selecting a web scraping tool involves assessing usability, cost, and flexibility for different data formats.

What Are Web Scraping Tools and Why Are They Important?

Web scraping tools are software applications that automate the extraction of data from websites. They send HTTP requests to a site, pull out only the HTML content that matches program-defined criteria, and convert it into structured formats such as CSV files, JSON files, or database records. Many of these tools run in the cloud.
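As a rough illustration of that flow, the sketch below parses a small HTML snippet, keeps only the elements that match chosen criteria, and exports the result as CSV and JSON. It uses only the Python standard library. The HTML sample, the class names `product`, `name`, and `price`, and the helper names are all assumptions made for this example; a real scraper would first fetch the page with an HTTP request.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would fetch this over HTTP.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">19.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []        # finished records
        self._field = None    # field currently being read, if any
        self._current = {}    # record under construction

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "li" and cls == "product":
            self._current = {}
        elif tag == "span" and cls in ("name", "price"):
            self._field = cls

    def handle_data(self, data):
        # Only keep text that sits inside one of the targeted spans.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(self._current)
            self._current = {}


def extract_products(html):
    """Return a list of {"name": ..., "price": ...} records found in the HTML."""
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


def to_csv(rows):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize the records as a JSON array."""
    return json.dumps(rows, indent=2)


products = extract_products(SAMPLE_HTML)
print(to_csv(products))
print(to_json(products))
```

Commercial tools add scheduling, proxy handling, and JavaScript rendering on top of this basic extract-and-export loop.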

More advanced scraping tools use machine learning and artificial intelligence to gather dynamic website content, and some also work around CAPTCHAs and anti-bot mechanisms. Web scraping tools have become important in modern digital operations because they serve several purposes:

- Market Research & Competitive Analysis – Web scraping APIs enable businesses to track competitors' pricing, products, and customer sentiment.

- Lead Generation – Organizations extract contact details from business directories and social platforms.

- E-commerce & Price Monitoring – Retailers use price monitoring tools to adjust their pricing and marketing strategies.

- News & Content Aggregation – Media organizations gather recent news data.

- Financial Data Extraction – Investors use scraping to extract stock prices, cryptocurrency market developments, and financial reports.

- AI & Machine Learning Training – Data scientists obtain the datasets used for AI model training through scraping.

Because data has become the foundation of operational decisions, web scraping for analytics serves as an essential decision-making aid.

Key Benefits of Web Scraping Tools

- Automation & Efficiency – Automated collection reduces manual data gathering, which saves labor and time.

- Real-Time Data Access – Businesses stay current on market developments and pricing.

- Scalability – Tools can extract large volumes of data from several sources at once.

- Customizable Data Extraction – Users can define rules that target particular data points.

- Cost-Effective – Automation lowers costs by reducing the analyst time previously spent on manual data collection.

- Improved Decision-Making – Companies make better decisions using data insights obtained in real time.

15 Best Web Scraping Tools

1. ParseHub

ParseHub is a web scraping tool that extracts website data through a no-code interface. It renders JavaScript and handles AJAX, which makes it suitable for modern web applications. Scraping tasks can be automated and run in the cloud.

Users at any skill level can use ParseHub because its visual interface is easy to understand and navigate. Data can be exported in JSON, Excel, and CSV formats, so businesses can add scraped information to their applications.

Key Features:

- No-code interface with a visual workflow builder.

- Handles AJAX, JavaScript, and pagination.

- Cloud-based storage with automation options.

Key Purpose:

- ParseHub works best for companies and researchers who need to extract information from interactive websites such as e-commerce, travel, and social media platforms.

Price: Free plan available; paid plans start at $189/month.

Website: www.parsehub.com

2. ScraperAPI

ScraperAPI counters anti-bot protections by combining rotating proxies, CAPTCHA solving, and geolocation targeting. It simplifies large-scale web scraping through an API that handles common problems such as IP bans.

The tool serves businesses that need to gather large volumes of data without running into IP blocks. Its scalable infrastructure supports many simultaneous requests and can handle millions of queries per month.

Key Features:

- Rotating proxies and CAPTCHA-solving.

- Geolocation targeting for region-specific scraping.

- High-speed API with scalable infrastructure.

Key Purpose:

- ScraperAPI suits businesses and developers who need large-scale, secure data retrieval without managing proxies themselves.

Price: Starts at $49/month.

Website: www.scraperapi.com

3. Scrapy

Scrapy is an open-source data extraction framework for Python developers. It gives developers and data scientists a scalable toolkit for building web crawlers. Built-in data processing pipelines help structure and store the extracted data. Its modular structure can be customized, which makes it a good fit for solution-specific requirements.

Key Features:

- Open-source, customizable, and Python-based.

- Built-in middleware and data processing pipelines.

- Supports large-scale web crawling.

Key Purpose:

- Scrapy suits data scientists and developers who need to extract structured information from various sources when building analytical and machine-learning models.

Price: Free (open-source).

Website: www.scrapy.org

4. Octoparse

Octoparse is a web scraping tool built for users without programming skills, who extract data through a no-code, drag-and-drop interface. Users with minimal technical skills can extract structured and unstructured data from websites. The tool supports automation, scheduled scraping, and execution in the cloud.

Octoparse handles AJAX, login authentication, and pagination, so it can deal with the dynamic content found on modern websites. Its data transformation and export capabilities help fit scraped data into business workflows.

Key Features:

- No-code visual scraper with a drag-and-drop interface.

- Handles AJAX, dynamic content, and authentication.

- Automated, scheduled scraping on cloud-based systems.

Key Purpose:

- Octoparse provides accessible web data collection for businesses, marketers, and researchers.

Price: Free plan available; paid plans start at $89/month.

Website: www.octoparse.com

5. Bright Data

Bright Data is an enterprise-level web scraping solution that provides proxy services for large-scale data extraction. It delivers secure, anonymous web scraping through a wide network of residential, mobile, and data center proxies.

With real-time data collection and sophisticated scraping features, Bright Data attracts enterprises that carry out competitive research, price monitoring, and market research. The platform has ethical scraping policies that follow legal requirements.

Key Features:

- Access to residential, mobile, and data center proxies.

- Real-time data collection with high anonymity.

- Ethical scraping with compliance management.

Key Purpose:

- Bright Data suits corporations and research organizations that need fast, large-scale data retrieval while maintaining security and anonymity.

Price: Custom pricing based on usage.

Website: www.brightdata.com

6. Diffbot

Diffbot is an AI-based tool that automatically converts unstructured web data into structured formats. It uses machine learning to extract meaningful data from web pages, which suits data-dependent businesses.

Business users rely on Diffbot to gather web data for market research, competitive intelligence, and business analytics. Because the tool automates extraction, users gain access to structured, high-quality data with less manual work.

Key Features:

- AI-driven automatic data extraction.

- Converts unstructured pages into clearly organized data.

- Cloud-based API for seamless integration.

Key Purpose:

- Diffbot is worth considering for businesses that need NLP-enabled data extraction for market intelligence and advanced analytics.

Price: Custom pricing based on API usage.

Website: www.diffbot.com

7. ScrapingBee

ScrapingBee is a cloud-based web scraping tool aimed at JavaScript-heavy websites. Its headless browser functionality lets users extract information from dynamic web pages while reducing the risk of being blocked.

ScrapingBee supports reliable data retrieval through rotating proxies and automatic CAPTCHA solving. It is best suited to developers and businesses that need large-scale, automated web scraping.

Key Features:

- Headless browsers handle JavaScript-rendered content.

- Built-in proxy rotation and CAPTCHA-solving.

- High-speed, scalable web scraping API.

Key Purpose:

- This tool works very well for developers scraping websites that have heavy JavaScript content, such as social media sites and e-commerce platforms.

Price: Plans start at $49/month.

Website: www.scrapingbee.com

8. Common Crawl

Common Crawl offers an open archive of web crawl data, free of charge, for research and analysis. It provides extensive datasets that companies can use for AI training, search applications, and large-scale data operations.

Its extensive database of web snapshots supports text mining and natural language processing (NLP) projects by researchers and organizations.

Key Features:

- Open-source archive with petabytes of web data.

- Structured web snapshot records suited to natural language processing and data extraction.

- Free access to large-scale web crawling datasets.

Key Purpose:

- Common Crawl best serves researchers, data scientists, and AI developers who perform large-scale web data analysis.

Price: Free.

Website: www.commoncrawl.org

9. Scrape-It.Cloud

Scrape-It.Cloud is a cloud platform that provides scalable data extraction through an easy-to-use API. It offers three main functions: rotating IPs, automatic CAPTCHA handling, and automatic scheduling for continuous data retrieval. Business users can extract current data from different sources for finance, e-commerce, and market research applications.

Key Features:

- API-based web scraping with IP rotation.

- CAPTCHA-solving and automated scheduling.

- Cloud-based infrastructure for scalability.

Key Purpose:

- Scrape-It.Cloud serves customers who need automated, scalable data scraping for analytics and intelligence purposes.

Price: Starts at $29/month.

Website: www.scrape-it.cloud

10. Scrapingdog

Scrapingdog offers businesses a fast tool for live data extraction. It provides proxy rotation, JavaScript execution, and geolocation targeting for accurate, large-scale scraping.

Its high-speed data collection suits companies that need regular, automated data acquisition from e-commerce platforms and business directories.

Key Features:

- Real-time scraping with proxy rotation.

- Supports JavaScript execution and dynamic content.

- Geolocation targeting for region-specific data.

Key Purpose:

- Scrapingdog suits businesses that need real-time data extraction for price monitoring and market intelligence.

Price: Plans start at $50/month.

Website: www.scrapingdog.com

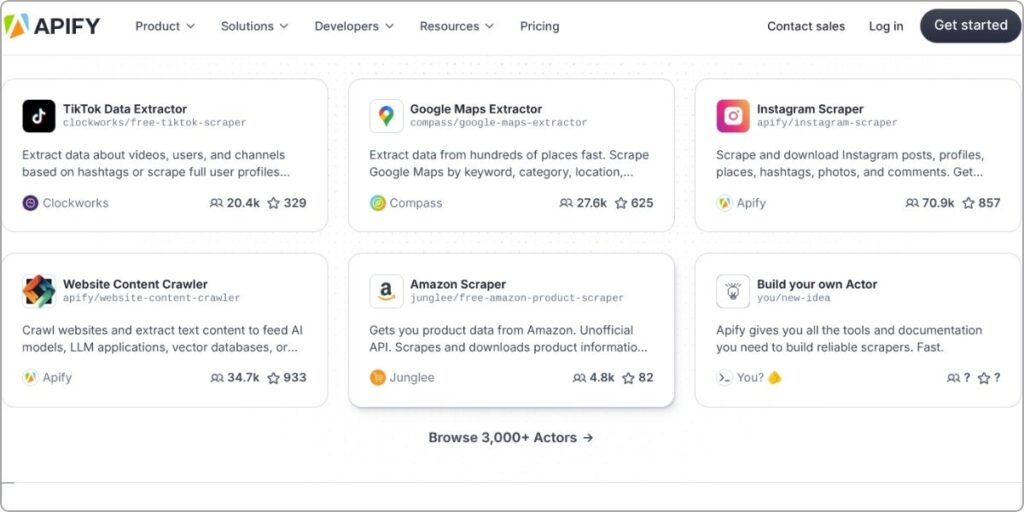

11. Apify

Apify is a flexible web scraping platform that lets programmers build scalable web crawlers and data extraction solutions. It runs in the cloud, connects to multiple APIs, and supports automation. Its structured data extraction, suitable for e-commerce, real estate, and social media use cases, supports data-driven business decisions.

Key Features:

- Scalable web crawlers with cloud execution.

- API integrations for automated workflows.

- Customizable scraping solutions.

Key Purpose:

- Apify suits businesses and developers who need robust, automated cloud scraping.

Price: Free tier available; paid plans start at $49/month.

Website: www.apify.com

12. Mozenda

Mozenda is no-code web scraping software that lets users extract data through a user-friendly visual interface. Organizations can use it to automate web scraping and integrate the data into their operational workflows.

Mozenda also provides data management and analytics tools, which yield clean extracted data that can be exported to CSV, JSON, and XML formats. Its scraping engine works on dynamic content, enabling data collection from e-commerce platforms, news outlets, and financial documents.

Key Features:

- Point-and-click interface for easy data extraction.

- Automated scheduling and cloud storage integration.

- Data cleansing with CSV, JSON, and XML export.

Key Purpose:

- Mozenda suits enterprises that need to extract data from e-commerce platforms, financial reports, and news portals.

Price: Starts at $250/month.

Website: www.mozenda.com

13. Import.io

Import.io is a web scraping tool that converts web data into clean, structured datasets. Users build scrapers through a graphical interface, which makes it usable by both novices and experts. Real-time data extraction keeps businesses ready for market analysis, price monitoring, and lead acquisition.

The API integration feature lets users automate data collection and expand their data processing capacity. The tool can extract data from complex, JavaScript-driven webpages while operating within data privacy restrictions.

Key Features:

- Visual interface for building scrapers without writing code.

- Real-time data extraction and API integration.

- Handles JavaScript-heavy websites for accurate scraping.

Key Purpose:

- Import.io gives companies automated, real-time web data extraction for market research, lead generation, and price tracking.

Price: Custom pricing based on usage.

Website: www.import.io

14. Grepsr

Grepsr is an enterprise-level web scraping tool that businesses use to automate data extraction. It offers an intuitive interface and simple configuration for quick, dependable data collection. Users can configure parameters for automated scraping runs that update data on a schedule.

Grepsr has built-in quality assurance systems that verify extracted information for accuracy and reliability. The platform provides cloud-based storage and direct integration with business intelligence tools, which makes it popular in the e-commerce, finance, and market research industries.

Key Features:

- Automated data extraction with user-defined scheduling.

- Cloud-based storage and integration with BI tools.

- Data validation and quality assurance for accuracy.

Key Purpose:

- Grepsr serves companies that need continuously refreshed data across e-commerce, finance, and market research.

Price: Starts at $129/month.

Website: www.grepsr.com

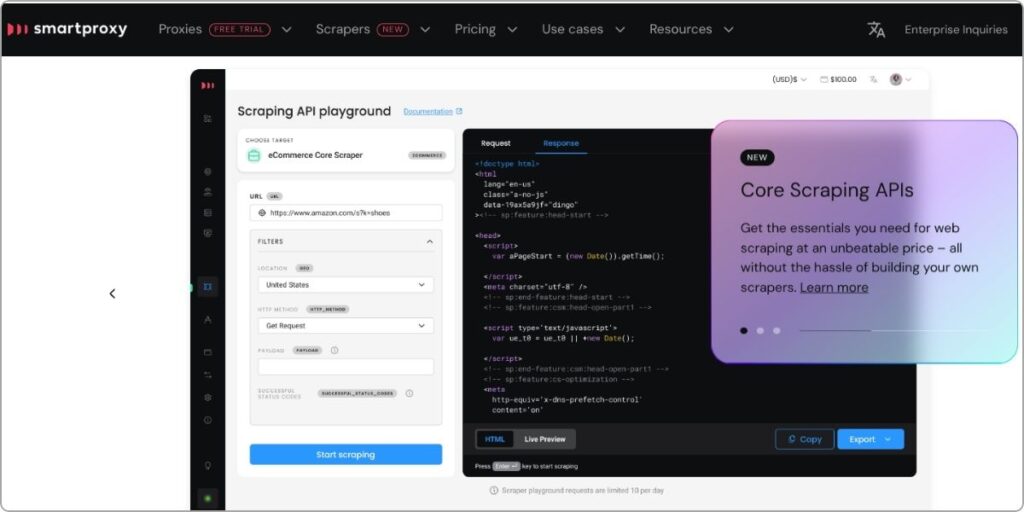

15. Smartproxy

Smartproxy is a proxy-based data extraction tool that helps users get past anti-bot systems. It gives users access to extensive residential, mobile, and data center proxy networks for scraping web pages without being blocked. It serves businesses well for large data-gathering projects such as competitor analysis and customer sentiment analysis.

Smartproxy offers a dashboard and an API that let businesses streamline and automate their web scraping operations. Its fast network and IP rotation support reliable data collection, which makes it a dependable option for marketing teams, developers, and data analysts.

Key Features:

- A large pool of residential, mobile, and data center proxies.

- Fast network with IP rotation to reduce the chance of detection.

- API access for automating and integrating scraping tools.

Key Purpose:

- Smartproxy benefits large-scale data extraction by overcoming anti-bot restrictions, which makes it valuable for competitor analysis and sentiment tracking.

Price: Starts at $12.5/month.

Website: www.smartproxy.com

Tips to Utilize Web Scraping Tools Effectively

- Understand Legal & Ethical Guidelines – Learn the legal and ethical guidelines that apply to data scraping. Comply with website terms of service and with data privacy regulations such as GDPR and CCPA.

- Use Proxies & Rotating IPs – Proxies and rotating IP addresses help scrapers get past the detection systems that block scraping activity.

- Select the Right Tool for Your Requirements – Review tools for scalability, ease of use, and budget before choosing one.

- Set Up Automated Schedules – Use cloud-based scraping software to run data extraction on a regular schedule.

- Clean & Organize Data – Structure the extracted data and remove duplicates and errors before further processing.

- Monitor & Maintain Your Scraper – Update your scripts regularly to handle changes on target websites.
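The cleaning tip can be sketched in a few lines of Python. The example below trims whitespace, drops records with a missing key field, and removes duplicates. The field names `name` and `url`, the `clean_records` helper, and the sample records are assumptions made for illustration, and treating records as duplicates when their key fields match case-insensitively is a simplification that may not suit every dataset.

```python
def clean_records(records, key_fields=("name", "url")):
    """Strip whitespace, drop records missing a key field, and remove duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        # Normalize string values by trimming surrounding whitespace.
        normalized = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Skip records where any key field is missing or empty.
        if not all(normalized.get(field) for field in key_fields):
            continue
        # Case-insensitive identity built from the key fields (a simplification).
        identity = tuple(str(normalized[field]).lower() for field in key_fields)
        if identity in seen:
            continue
        seen.add(identity)
        cleaned.append(normalized)
    return cleaned


# Hypothetical scraped records with a duplicate and an incomplete entry.
raw = [
    {"name": " Widget ", "url": "https://example.com/widget", "price": "9.99"},
    {"name": "widget", "url": "https://example.com/widget", "price": "9.99"},
    {"name": "", "url": "https://example.com/unknown", "price": "1.00"},
    {"name": "Gadget", "url": "https://example.com/gadget", "price": "19.50"},
]
cleaned = clean_records(raw)
print(cleaned)
```

Keeping the first occurrence of each duplicate is one possible policy; keeping the most recent record is another reasonable choice when prices change between runs.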

Conclusion

Web scraping tools have become core business tools that researchers and data analysts depend on. They automate data collection so users can obtain the data they need for market evaluation, financial modeling, and lead generation.

Responsible, ethical scraping, combined with legal compliance and suitable tool selection, gives organizations the most benefit. Web scraping continues to develop through AI-powered solutions that let users obtain web data more efficiently and at greater scale. Strong AI development skills are essential for creating, customizing, and maintaining these advanced scraping solutions.

FAQs

Is web scraping legal?

Web scraping is generally legal when it follows ethical practices and the terms of service defined by websites. Scraping public information is generally permitted, though firms that scrape protected or private data may face legal repercussions.
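One practical way to respect a site's stated crawling preferences is to check its robots.txt file before fetching pages. The sketch below uses Python's standard `urllib.robotparser` module on an inline sample so that it runs offline. The robots.txt content, the `example-bot` user agent, and the example.com URLs are assumptions for illustration. A robots.txt file is only one signal and does not replace reading a site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_rules(robots_text):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


rules = build_rules(ROBOTS_TXT)

# Ask whether the (hypothetical) bot may fetch each URL.
allowed_public = rules.can_fetch("example-bot", "https://example.com/products")
allowed_private = rules.can_fetch("example-bot", "https://example.com/private/data")

# Requested pause between requests, in seconds, if the file specifies one.
delay = rules.crawl_delay("example-bot")

print(allowed_public, allowed_private, delay)
```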

Which web scraping tools are best for beginners?

Beginners without programming knowledge should consider Octoparse, ParseHub, and WebHarvy.

Can web scraping be detected?

Yes. Websites protect themselves against scraping with CAPTCHAs, bot detection mechanisms, and IP address blocking. Proxies and rotating user agents make detection less likely, but they do not make it impossible.

What programming languages are used for web scraping?

Developers choose a programming language based on the needs of the project. Web scraping most commonly uses Python with libraries such as BeautifulSoup, Scrapy, and Selenium, and JavaScript with Puppeteer and Cheerio.

How often do web scrapers need to be updated?

Scrapers need regular review because websites continuously update their content and structure, which leads to inaccurate data and broken scripts.
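One simple way to notice that a scraper needs updating is to check how often expected fields come back empty after each run. The sketch below is a minimal example of that idea. The function names, the field names, and the 50% threshold are all assumptions chosen for illustration and not a standard.

```python
def missing_field_rate(records, required_fields):
    """Return, per field, the fraction of records where it is missing or empty."""
    total = len(records)
    if total == 0:
        # No records at all is treated as every field being missing.
        return {field: 1.0 for field in required_fields}
    return {
        field: sum(1 for record in records if not record.get(field)) / total
        for field in required_fields
    }


def looks_broken(records, required_fields, threshold=0.5):
    """Flag a run when any required field is missing in at least `threshold` of records."""
    rates = missing_field_rate(records, required_fields)
    return any(rate >= threshold for rate in rates.values())


# Hypothetical results from two scraping runs.
healthy_run = [{"name": "A", "price": "1"}, {"name": "B", "price": "2"}]
broken_run = [{"name": "A", "price": ""}, {"name": "B"}]

print(looks_broken(healthy_run, ["name", "price"]))
print(looks_broken(broken_run, ["name", "price"]))
```

A sudden jump in the missing rate for one field usually suggests that the target page's layout changed and the matching selector needs attention.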